![\includegraphics[width=\textwidth]{system_overview}](img2.png)

Environment perception is a basic problem in the design of autonomous mobile cognitive systems, i.e., mobile robots. A crucial part of perception is learning, detecting, localizing, and recognizing objects, which has to be done with limited resources. The performance of such a robot depends strongly on the accuracy and reliability of its percepts and on the computational effort of the interpretation process involved. Precise localization of objects is the dominant step in any navigation or manipulation task.

This paper proposes a new method for the learning, fast detection

and localization of instances of 3D object classes. The approach

uses 3D laser range and reflectance data acquired by an

autonomous mobile robot to perceive the 3D objects. The 3D range

and reflectance data are transformed into images by off-screen

rendering. Based on the ideas of Viola and Jones

[25], we built a cascade of classifiers, i.e., a

linear decision tree. The classifiers are composed of

classification and regression trees (CARTs) and model the objects

with their view dependencies. Each CART makes its decisions based

on feature classifiers and learned return values. The features

are edge, line, center surround, or rotated features. Lienhart

et. al and Viola and Jones have implemented a method for

computing effectivly these features using an intermediate

representation, namely, integral image

[12,25]. For learning object classes, a

boosting technique, particularly, Ada Boost, is used

[6]. After detection, the object is localized

using a matching technique. Hereby the pose is determined with

six degrees of freedom, i.e., with respect to the ![]() ,

, ![]() , and

, and

![]() positions and the roll, yaw and pitch angles. Finally the

quality of the object localization is evaluated by fast

subsampling of the scanned 3D data. The resulting approach for

object detection is reliable and real-time capable and combines

recent results in computer vision with the emerging technology of

3D laser scanners. Fig.

positions and the roll, yaw and pitch angles. Finally the

quality of the object localization is evaluated by fast

subsampling of the scanned 3D data. The resulting approach for

object detection is reliable and real-time capable and combines

recent results in computer vision with the emerging technology of

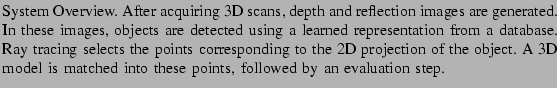

3D laser scanners. Fig. ![]() gives an overview of the

implemented system.

gives an overview of the

implemented system.
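To illustrate why the integral image makes rectangular features cheap to evaluate, the following is a minimal sketch in plain Python. It is not the implementation used in this paper or in [12,25]; the function names `integral_image`, `rect_sum`, and `edge_feature`, and the toy 4x4 image, are assumptions chosen for illustration. After one pass over the image, the sum over any axis-aligned rectangle needs only four table lookups, so a two-rectangle edge feature costs a constant number of operations regardless of its size.

```python
def integral_image(img):
    """Return an (h+1) x (w+1) summed-area table with a zero top row and left column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0  # running sum of the current image row
        for x in range(w):
            row_sum += img[y][x]
            # entry = sum of all pixels above (previous table row) + current row so far
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the pixels in the rectangle with top-left corner (x, y), width w, height h."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def edge_feature(ii, x, y, w, h):
    """Two-rectangle edge feature: left half minus right half (w is assumed even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


# Hypothetical toy image: bright left half, dark right half (a vertical edge).
image = [
    [9, 9, 1, 1],
    [9, 9, 1, 1],
    [9, 9, 1, 1],
    [9, 9, 1, 1],
]

ii = integral_image(image)
total = rect_sum(ii, 0, 0, 4, 4)     # sum over the whole image
edge = edge_feature(ii, 0, 0, 4, 4)  # strong response on the vertical edge
print(total, edge)
```

In a detector of the kind described above, such feature values would be compared against learned thresholds inside each classifier stage; that step is omitted here.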