|

ICP algorithms tend to have problems if too many points are chosen from featureless regions of the data. In this case the algorithm converges slowly, finds the wrong pose, or even diverges. Normal space sampling, as proposed by Rusinkiewicz and Levoy, aims at constraining translational sliding of input meshes, generated from the point cloud [20]. Their algorithm tries to ensure that the normals of the selected points uniformly populate the sphere of directions. Covariance sampling as proposed by Levoy et al. extends the normal space approach. They identify whether a pair of meshes will be unstable in the ICP algorithms by estimating a covariance matrix from a sparse uniform sampling of the input [8].

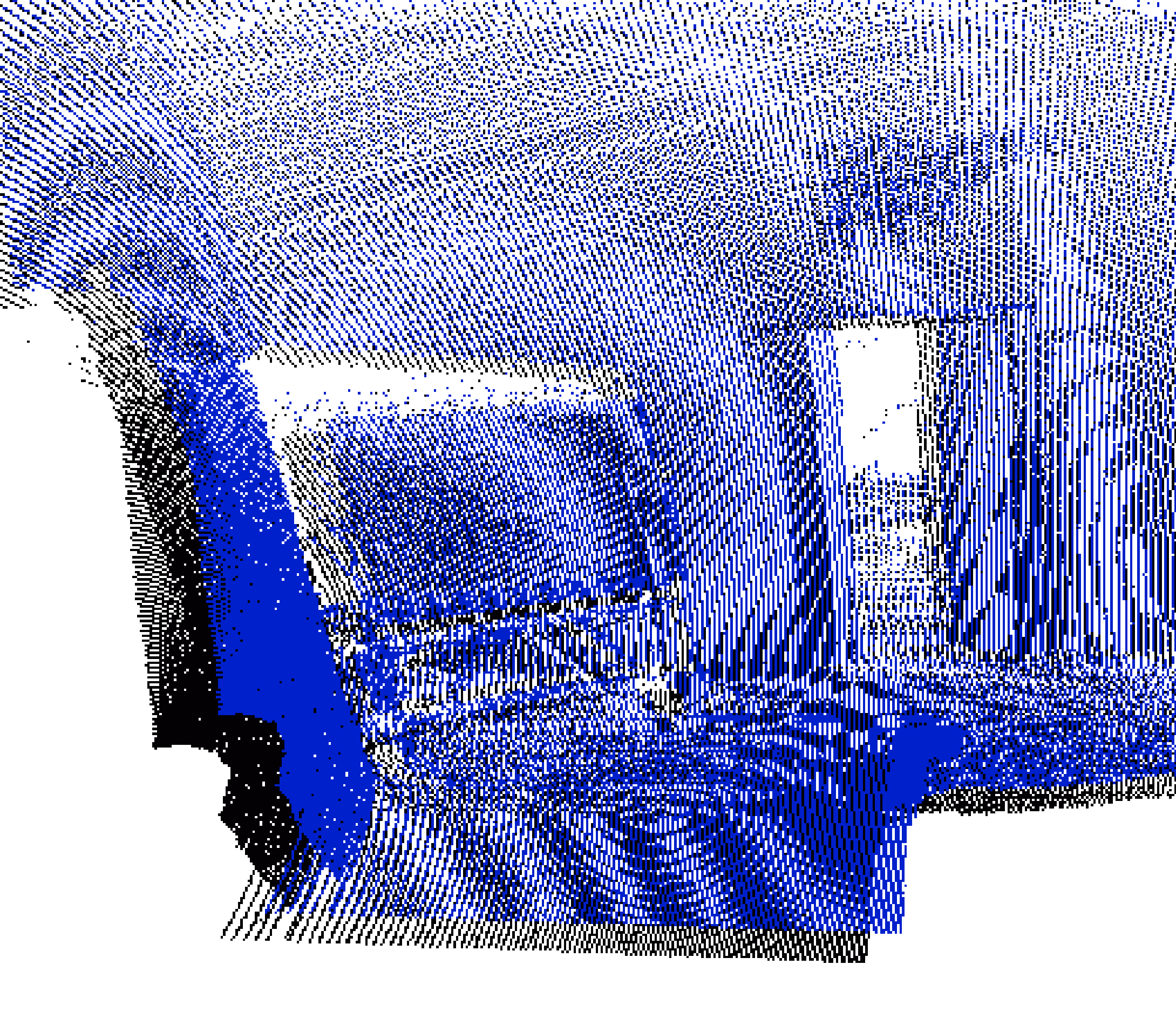

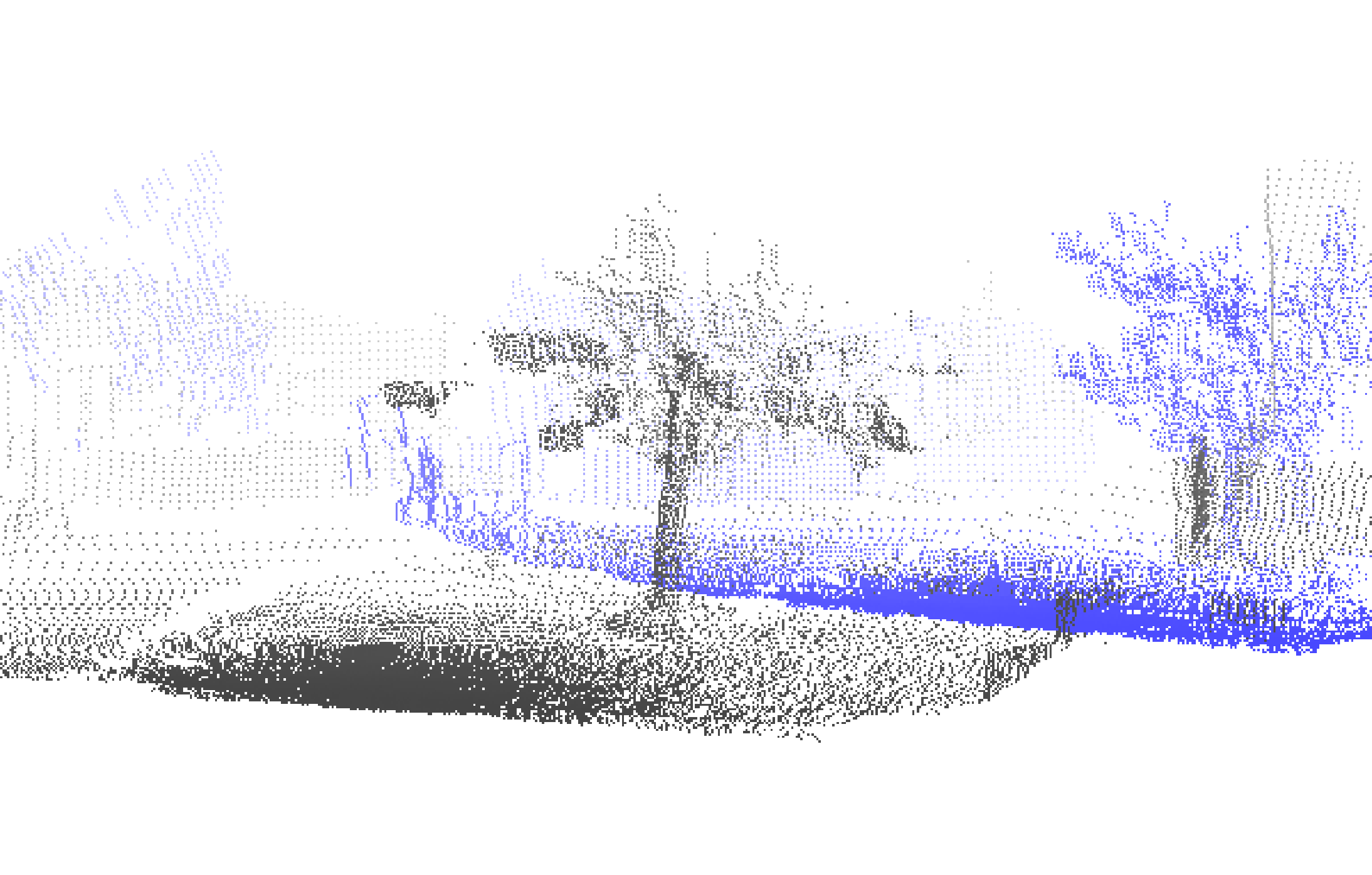

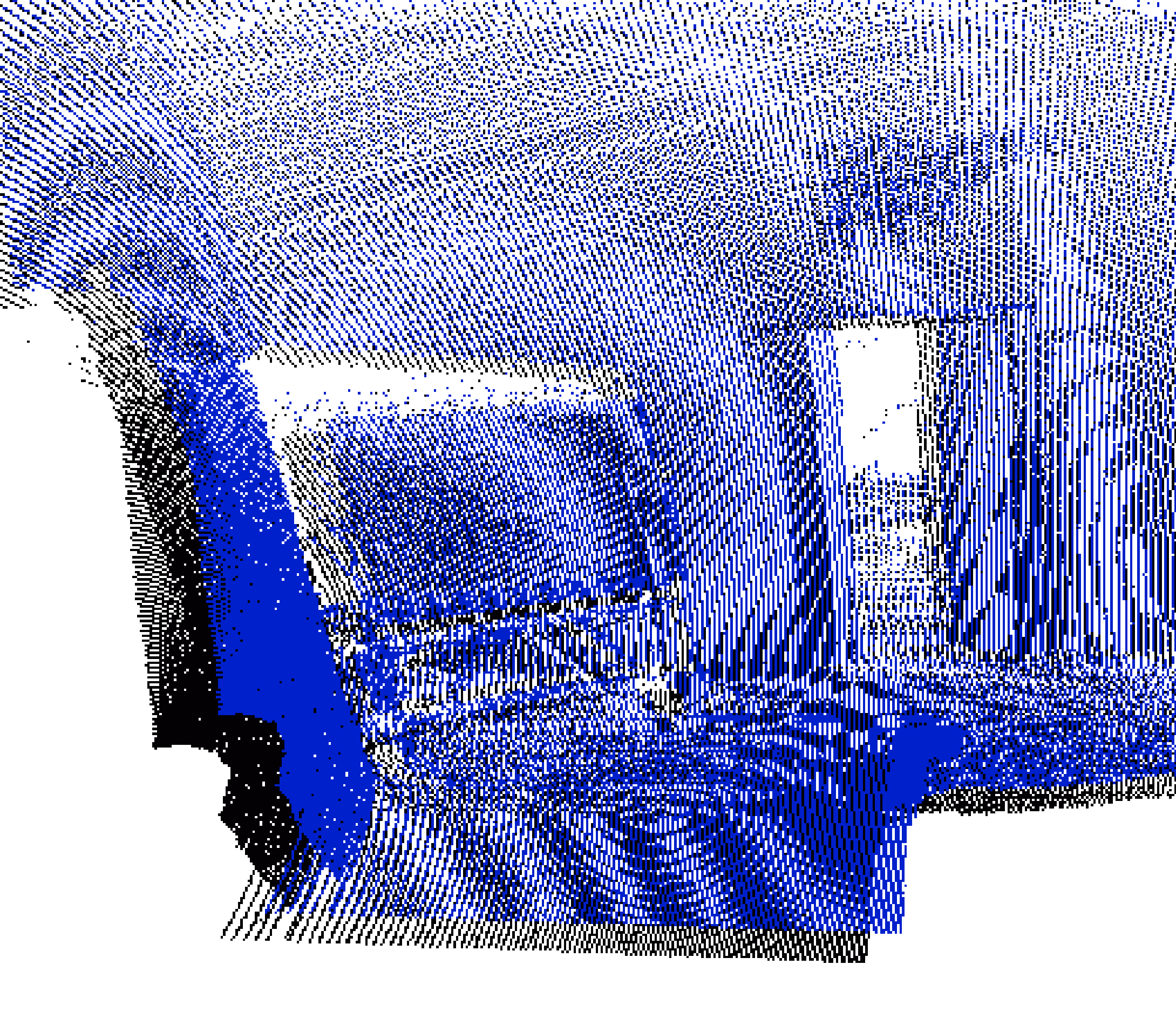

However, these state of the art ICP variants all assume that the input data is given as a mesh. In many application scenarios a mesh is not available, e.g., 3D data in robotics. Here, measurements contain in addition to Gaussian noise so called salt-and-pepper noise. Furthermore, in robotics the scenes are often sparsely sampled by the sensor. For these two reasons, simple meshing methods based on the topology of the acquired points cannot be applied and roboticists stick to using the raw point clouds. In this case the point-to-point metric, cf. Eq. (1), and closest point search have to be used. For computing closest points, k-d trees [7] are the standard search structure (see section 2.1 for a detailed description of k-d trees). Simon et al. obtained much speedup from a closest point cache that reduced the number of necessary k-d tree lookups [21].

|

Improving the speed of ICP algorithms received much attention recently. The developed wide variety of methods aim to increase the performance of computing corresponding points. Currently available methods include for example exploiting the triangle equation [11,9] and heuristics based on multi resolution [14].

Recently, Greenspan and Yurick used approximate k-d trees for ICP algorithms [10]. The idea behind this is to return as an approximate nearest neighbor the closest point in the bucket region where the query point lies. This value is determined from the depth-first search only, thus expensive ball-within-bounds tests and backtracking are not used [10]. Inspired by these ideas, the ICP algorithms has been evaluated in [17] using the approximate nearest neighbor search introduced by Arya et al. [2,3]: k-d trees empirically outperform bd-trees (box decomposition trees) with and without approximation. Approximation does not significantly deteriorate the quality of scan registration, but significantly increases the speed of scan matching [17].

The following section presents a novel method for computing exact closest points for ICP. It combines k-d trees with caching.