Next: The Cascade of Classifiers

Up: Object Classification

Previous: Feature Detection using Integral

The Gentle AdaBoost algorithm is a variant of the powerful
boosting learning technique [Freund_1996]. It is used to
select a set of simple features to achieve a given detection and
error rate. In the following, a detection is referred to as a hit
and an error as a false alarm. The various AdaBoost algorithms
differ in the update scheme of the weights. According to Lienhart
et al., Gentle AdaBoost is the most successful learning procedure
tested for face detection applications [Lienhart_2003_1].
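The hit and false alarm terminology can be made concrete with a small sketch. It assumes labels and predictions in {-1, +1} and the usual reading that a false alarm is a negative example classified as positive; the function name `hit_and_false_alarm_rate` and the sample data are illustrative only and do not come from the text.

```python
def hit_and_false_alarm_rate(labels, predictions):
    """Return (hit rate, false alarm rate) for labels/predictions in {-1, +1}."""
    positives = sum(1 for y in labels if y == 1)
    negatives = sum(1 for y in labels if y == -1)
    # A hit is a positive example that the classifier also marks as positive.
    hits = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
    # A false alarm is a negative example that the classifier marks as positive.
    false_alarms = sum(1 for y, p in zip(labels, predictions) if y == -1 and p == 1)
    hit_rate = hits / positives if positives else 0.0
    false_alarm_rate = false_alarms / negatives if negatives else 0.0
    return hit_rate, false_alarm_rate


# Made-up example: 4 positives (3 detected), 4 negatives (1 wrongly accepted).
labels = [1, 1, 1, -1, -1, -1, -1, 1]
predictions = [1, 1, -1, -1, 1, -1, -1, 1]
hit_rate, false_alarm_rate = hit_and_false_alarm_rate(labels, predictions)
```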

The learning is based on $N$ weighted training examples
$(x_1, y_1), \ldots, (x_N, y_N)$, where $x_i$ are the images and
$y_i \in \{-1, 1\}$, $i \in \{1, \ldots, N\}$, the classified
output. At the beginning of the learning phase the weights $w_i$
are initialized with $w_i = 1/N$. The following three steps are
repeated to select simple features until a given detection rate
$d$ is reached:

- Every simple classifier, i.e., a single feature, is fit to the
data. The error $e$ is calculated with respect to the
weights $w_i$.

- The best feature classifier $h_t$ is chosen for the
classification function. The counter $t$ is increased.

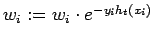

- The weights are updated with
$w_i \leftarrow w_i \cdot e^{-y_i h_t(x_i)}$ and renormalized.

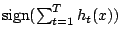
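The three steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in the text: each simple classifier is taken to be a threshold test on one precomputed feature value, fitted by weighted least squares (the standard Gentle AdaBoost choice), and the loop stops once hypothetical hit and false alarm targets are met on the training set. All names (`fit_stump`, `train_gentle_adaboost`, `min_hit_rate`, and so on) are made up for this example.

```python
import math


def weighted_mean(pairs):
    """Weighted mean of the labels in (weight, label) pairs; 0.0 if empty."""
    total = sum(w for w, _ in pairs)
    return sum(w * y for w, y in pairs) / total if total > 0 else 0.0


def fit_stump(values, labels, weights):
    """Weighted least-squares fit of h(v) = alpha if v >= thr else beta.

    Returns (error, thr, alpha, beta) for the best threshold.
    """
    best = None
    for thr in sorted(set(values)):
        upper = [(w, y) for v, y, w in zip(values, labels, weights) if v >= thr]
        lower = [(w, y) for v, y, w in zip(values, labels, weights) if v < thr]
        # The weighted label mean on each side minimizes the squared error.
        alpha, beta = weighted_mean(upper), weighted_mean(lower)
        error = sum(w * (y - (alpha if v >= thr else beta)) ** 2
                    for v, y, w in zip(values, labels, weights))
        if best is None or error < best[0]:
            best = (error, thr, alpha, beta)
    return best


def classify(ensemble, features, i):
    """Sign of the summed simple-classifier outputs for training example i."""
    score = sum(alpha if features[j][i] >= thr else beta
                for j, thr, alpha, beta in ensemble)
    return 1 if score >= 0 else -1


def rates(ensemble, features, labels):
    """Hit rate and false alarm rate of the ensemble on the training set."""
    preds = [classify(ensemble, features, i) for i in range(len(labels))]
    pos = [p for p, y in zip(preds, labels) if y == 1]
    neg = [p for p, y in zip(preds, labels) if y == -1]
    hit = pos.count(1) / len(pos) if pos else 0.0
    false_alarm = neg.count(1) / len(neg) if neg else 0.0
    return hit, false_alarm


def train_gentle_adaboost(features, labels, min_hit_rate=0.99,
                          max_false_alarm_rate=0.5, max_rounds=50):
    """features[j][i] is the value of feature j on example i (assumed layout)."""
    n = len(labels)
    weights = [1.0 / n] * n  # uniform initial weights
    ensemble = []            # entries: (feature index, thr, alpha, beta)
    for _ in range(max_rounds):
        # Step 1: fit every single-feature classifier under the current weights.
        fits = [(fit_stump(values, labels, weights), j)
                for j, values in enumerate(features)]
        # Step 2: keep the classifier with the smallest weighted error.
        (error, thr, alpha, beta), j = min(fits, key=lambda f: f[0][0])
        ensemble.append((j, thr, alpha, beta))
        # Step 3: reweight with exp(-y * h(x)) and renormalize to sum 1.
        outputs = [alpha if v >= thr else beta for v in features[j]]
        weights = [w * math.exp(-y * h)
                   for w, y, h in zip(weights, labels, outputs)]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Stopping rule (an assumption): training-set targets are met.
        hit, false_alarm = rates(ensemble, features, labels)
        if hit >= min_hit_rate and false_alarm <= max_false_alarm_rate:
            break
    return ensemble


# Toy data: feature 0 separates the classes, feature 1 does not.
features = [
    [0.1, 0.2, 0.3, 0.6, 0.7, 0.9],
    [0.5, 0.1, 0.9, 0.4, 0.8, 0.2],
]
labels = [-1, -1, -1, 1, 1, 1]
ensemble = train_gentle_adaboost(features, labels)
```

On this toy data the first round already selects feature 0, so the loop ends after a single iteration. Real training sets need many rounds, and a production implementation would sort the feature values once rather than rescanning them for every threshold.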

The final output of the classifier is
$\operatorname{sign}\left(\sum_{t=1}^{T} h_t(x)\right)$, with
$h_t(x) = \alpha$, if $x \geq \mathit{thr}$, and
$h_t(x) = \beta$ otherwise. $\alpha$ and $\beta$ are the outputs
of the fitted simple feature classifiers, which depend on the
assigned weights, the expected error and the classifier size.
Next, a cascade based on these classifiers is built.
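As an illustration of how the final classifier combines the simple feature classifiers, the following sketch evaluates the sign of their summed outputs for one example. It assumes that each simple classifier compares one feature value against a threshold and returns one of two real-valued outputs; the tuple layout `(feature index, threshold, alpha, beta)` and all numbers are invented for the example.

```python
def strong_classify(ensemble, feature_values):
    """Return +1 or -1 from the sign of the summed simple-classifier outputs.

    ensemble: list of (feature index, threshold, alpha, beta) tuples
    feature_values: feature values of the example to classify
    """
    score = 0.0
    for index, threshold, alpha, beta in ensemble:
        # Each simple classifier outputs alpha at or above its threshold,
        # and beta below it.
        score += alpha if feature_values[index] >= threshold else beta
    # A score of exactly zero is treated as positive here (an arbitrary choice).
    return 1 if score >= 0 else -1


# Two hand-picked simple classifiers, purely illustrative.
ensemble = [(0, 0.5, 0.8, -0.6), (1, 0.3, 0.4, -0.9)]
accepted = strong_classify(ensemble, [0.7, 0.4])  # 0.8 + 0.4 > 0
rejected = strong_classify(ensemble, [0.7, 0.1])  # 0.8 - 0.9 < 0
```

Because the outputs are real-valued rather than fixed votes, a confident simple classifier can outweigh a hesitant one, as in the second call above.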

root

2004-03-04
