Next: Learning Classification Functions

Up: Color-Independent Ball Classification

Previous: Color Invariance using Linear

There are many motivations for using features rather than pixels directly. For mobile robots, a critical motivation is that feature-based systems operate much faster than pixel-based systems [22]. The features are called Haar-like, since they follow the same structure as the Haar basis, i.e., the step functions introduced by Alfred Haar to define wavelets. They are also used in [13,3,20,22].

Fig. 3 (right) shows the eleven basis features, i.e., edge, line, diagonal and center-surround features. The base resolution of the object detector is … pixels; thus, the set of possible features in this area is very large (642592 features; see [13] for calculation details).

A single feature is effectively computed on input images

using integral images [22], also known as summed area

tables [13]. An integral image

pixels, thus,

the set of possible features in this area is very large (642592

features, see [13] for calculation details).

A single feature is effectively computed on input images

using integral images [22], also known as summed area

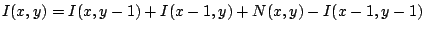

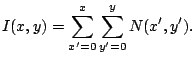

tables [13]. An integral image  is

an intermediate representation for the image and contains the sum of

gray scale pixel values of image

is

an intermediate representation for the image and contains the sum of

gray scale pixel values of image  with height

with height  and width

and width  ,

i.e.,

,

i.e.,

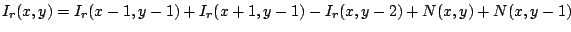

The integral image is computed recursively by the formula

$$I(x,y) = I(x,y-1) + I(x-1,y) + N(x,y) - I(x-1,y-1)$$

with $I(-1,y) = I(x,-1) = I(-1,-1) = 0$, therefore requiring only one scan over the input data. This intermediate representation $I(x,y)$ allows the computation of a rectangle feature value at $(x,y)$ with height and width $(h,w)$ using four references (see Fig. 4 (left)):

$$F(x,y,h,w) = I(x,y) + I(x+w,y+h) - I(x,y+h) - I(x+w,y),$$

which is the sum over all pixels $(x',y')$ with $x < x' \le x+w$ and $y < y' \le y+h$.

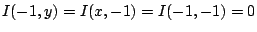

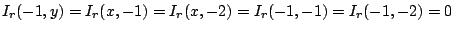

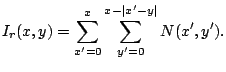
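The single-pass recursion and the four-reference lookup can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation; it assumes a gray-scale image stored as a list of rows `img[y][x]`, and the function names `integral_image`, `lookup` and `rect_sum` are hypothetical.

```python
def integral_image(img):
    """Single scan over img[y][x] using
    I(x,y) = I(x,y-1) + I(x-1,y) + N(x,y) - I(x-1,y-1)."""
    height, width = len(img), len(img[0])
    ii = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # start values: I(-1, y) = I(x, -1) = I(-1, -1) = 0
            left = ii[y][x - 1] if x > 0 else 0
            up = ii[y - 1][x] if y > 0 else 0
            diag = ii[y - 1][x - 1] if x > 0 and y > 0 else 0
            ii[y][x] = up + left + img[y][x] - diag
    return ii


def lookup(ii, x, y):
    # I(x, y), returning the start value 0 for x = -1 or y = -1
    return ii[y][x] if x >= 0 and y >= 0 else 0


def rect_sum(ii, x, y, h, w):
    """F(x,y,h,w) = I(x,y) + I(x+w,y+h) - I(x,y+h) - I(x+w,y):
    sum of the pixels with x < x' <= x+w and y < y' <= y+h."""
    return (lookup(ii, x, y) + lookup(ii, x + w, y + h)
            - lookup(ii, x, y + h) - lookup(ii, x + w, y))
```

For `img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]`, `rect_sum(integral_image(img), 0, 0, 2, 2)` returns 28, the sum of the lower-right 2×2 block (5 + 6 + 8 + 9). Passing `x = y = -1` includes the first column and row.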

For the computation of the rotated features, Lienhart et al. introduced rotated summed area tables that contain the sum of the pixels of the rectangle rotated by 45° with the bottom-most corner at $(x,y)$ and extending to the boundaries of the image (see Fig. 4 (middle and right)) [13]:

$$I_r(x,y) = \sum_{y' \le y - |x - x'|} N(x',y').$$

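The rotated integral image $I_r$ is computed recursively, i.e.,

$$I_r(x,y) = I_r(x-1,y-1) + I_r(x+1,y-1) - I_r(x,y-2) + N(x,y) + N(x,y-1),$$

using the start values $I_r(x,-1) = I_r(x,-2) = 0$, with the image padded on both sides by at least as many zero columns as it has rows. Four table lookups suffice to compute the pixel sum of any rotated rectangle whose top corner is $(x,y)$ and whose sides have lengths $w$ and $h$:

$$F_r(x,y,h,w) = I_r(x+w-h,\,y+w+h-1) + I_r(x,\,y-1) - I_r(x-h,\,y+h-1) - I_r(x+w,\,y+w-1).$$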
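A minimal Python sketch of the rotated table and the four-lookup rectangle sum follows. It rests on assumptions: the image is a list of rows `img[y][x]`, the rectangle has its top corner at $(x,y)$ and extends $w$ steps down-right and $h$ steps down-left, and the table is padded by `height` zero columns on each side so that zero start values are exact. All names are hypothetical, and this is not the code used in the paper.

```python
def rotated_integral_image(img):
    """Rotated summed area table for img[y][x]:
    I_r(x, y) = sum of N(x', y') over all pixels with y' <= y - |x - x'|.
    Computed row by row with
    I_r(x,y) = I_r(x-1,y-1) + I_r(x+1,y-1) - I_r(x,y-2) + N(x,y) + N(x,y-1).
    Returns a lookup function I_r(x, y) in image coordinates."""
    height, width = len(img), len(img[0])
    pad = height  # assumption: wide enough that values beyond the table are zero
    cols = width + 2 * pad
    table = [[0] * cols for _ in range(height)]

    def pixel(x, y):
        # N(x, y), treated as zero outside the image
        return img[y][x] if 0 <= x < width and 0 <= y < height else 0

    def lookup(x, y):
        # I_r(x, y); returns 0 for rows above the image (start values)
        # and outside the padded columns; rows below the image are not stored
        c = x + pad
        return table[y][c] if 0 <= y < height and 0 <= c < cols else 0

    for y in range(height):
        for c in range(cols):
            x = c - pad
            table[y][c] = (lookup(x - 1, y - 1) + lookup(x + 1, y - 1)
                           - lookup(x, y - 2) + pixel(x, y) + pixel(x, y - 1))
    return lookup


def rotated_rect_sum(rot, x, y, h, w):
    """Pixel sum of a 45-degree rotated rectangle with top corner (x, y),
    w steps down-right and h steps down-left, using four lookups.
    Assumes the rectangle lies inside the image."""
    return (rot(x + w - h, y + w + h - 1) + rot(x, y - 1)
            - rot(x - h, y + h - 1) - rot(x + w, y + w - 1))
```

On the square pixel grid a rotated rectangle with sides $w$ and $h$ covers $2wh$ pixels, so on a 6×6 all-ones image `rotated_rect_sum(rot, 2, 1, 2, 2)` returns 8.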

Since the features are compositions of rectangles, they are computed with several lookups and subtractions weighted with the area of the black and white rectangles.
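The area weighting can be illustrated with a hypothetical three-rectangle line feature (white, black, white) built from upright rectangle sums. Assuming weights that compensate for the area difference, the whole window gets weight -1 and the black middle third weight +3, so the response is zero on a uniform patch. The window layout, the sign convention and the helper names are assumptions for illustration only.

```python
def integral_image(img):
    # I(x, y): sum of img over rows 0..y and columns 0..x (img[y][x])
    height, width = len(img), len(img[0])
    ii = [[0] * width for _ in range(height)]
    for y in range(height):
        row = 0
        for x in range(width):
            row += img[y][x]
            ii[y][x] = row + (ii[y - 1][x] if y > 0 else 0)
    return ii


def box_sum(ii, left, top, width, height):
    # four references at the corners just outside the box
    def at(x, y):
        return ii[y][x] if x >= 0 and y >= 0 else 0
    x1, y1 = left - 1, top - 1
    x2, y2 = left + width - 1, top + height - 1
    return at(x2, y2) - at(x1, y2) - at(x2, y1) + at(x1, y1)


def haar_feature(ii, rects):
    # rects: list of (weight, left, top, width, height)
    return sum(wt * box_sum(ii, l, t, wd, ht) for wt, l, t, wd, ht in rects)


# hypothetical line feature on a 6x2 window: white | black | white
line_feature = [(-1, 0, 0, 6, 2),  # whole window, area 12
                (3, 2, 0, 2, 2)]   # black middle third, area 4
```

On a uniform patch the two weighted sums cancel; on a patch whose middle columns are bright, the feature responds strongly.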

To detect a feature, a threshold is required. This threshold is automatically determined during a fitting process such that the number of misclassified examples is minimized. Furthermore, the return values of the feature are determined such that the error on the examples is minimized. The examples are given in a set of images that are classified as positive or negative samples. The set is also used in the learning phase that is briefly described next.
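The threshold and return-value fit can be sketched as a decision stump. This is a minimal, unweighted version under assumptions not stated in the text: labels are +1/-1, the threshold minimizes the misclassification count, and the return values alpha and beta (for feature values below, and at or above, the threshold) are the mean labels on each side, which minimizes the squared error for that threshold. A boosting procedure would normally weight the examples; the function name `fit_stump` is hypothetical.

```python
def fit_stump(values, labels):
    """Fit one feature: values are feature values f_i, labels are +1 or -1.
    Returns (threshold, alpha, beta, misclassified)."""
    best = None
    # every distinct value as a threshold, plus one that puts all examples below
    for theta in sorted(set(values)) + [float("inf")]:
        for polarity in (1, -1):
            # predict `polarity` when f >= theta, otherwise -polarity
            errors = sum(1 for f, y in zip(values, labels)
                         if (polarity if f >= theta else -polarity) != y)
            if best is None or errors < best[0]:
                best = (errors, theta)
    errors, theta = best
    below = [y for f, y in zip(values, labels) if f < theta]
    above = [y for f, y in zip(values, labels) if f >= theta]
    # mean label per side minimizes the squared error for this threshold
    alpha = sum(below) / len(below) if below else 0.0
    beta = sum(above) / len(above) if above else 0.0
    return theta, alpha, beta, errors
```

For separable data such as feature values `[1, 2, 3, 10, 11, 12]` with labels `[-1, -1, -1, 1, 1, 1]`, the sketch returns the threshold 10 with return values -1.0 and 1.0 and no misclassified examples.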


root

2005-01-27

![\includegraphics[width=26.5mm]{IntegralImage}](img70.png)
