Another suitable optimization algorithm for eq. (3) is

the downhill simplex method as used by Cantzler et

al. [4]. A nondegenerate simplex is a

geometrical figure consisting of ![]() vertices in

vertices in ![]() dimensions, whereas the

dimensions, whereas the ![]() vertices span a

vertices span a ![]() -dimensional

vector space. Given an initial starting point

-dimensional

vector space. Given an initial starting point ![]() , the

starting simplex is computed through

, the

starting simplex is computed through

| (36) |

The downhill simplex method consists of a series of steps, i.e., reflections and contractions [14]. In a reflection step the algorithm moves the point of the simplex where the function is largest through the opposite face of the simplex to some lower point. If the algorithm reaches a ``valley floor'', the method contracts the simplex, i.e., the volume of the simplex decreases by moving one or several points, and moves along the valley [14].

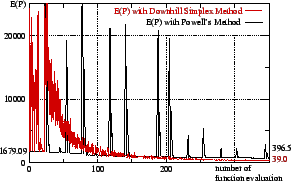

Figure 3 shows the minimization of eq. (3) with

the downhill simplex method in comparison with Powell's

method. The downhill simplex method performs worse during the

first steps, but reaches a better minimum than Powell's method. The

peaks in ![]() produced by Powell's method are the result of

search directions

produced by Powell's method are the result of

search directions ![]() cross a ``valley'' in combination with

large steps in Brent's line minimization algorithm.

cross a ``valley'' in combination with

large steps in Brent's line minimization algorithm.

|